The story of Linux so far, as short as it may be in the grand scheme of things, is one of constant forward momentum. There’s always another feature to implement, an optimization to make, and of course, another device to support. With developers’ eyes always on the horizon ahead of them, it should come as no surprise to find that support for older hardware or protocols occasionally falls by the wayside. When maintaining antiquated code monopolizes developer time, or even directly conflicts with new code, a difficult decision needs to be made.

Of course, some decisions are easier to make than others. Back in 2012 when Linus Torvalds officially ended kernel support for legacy 386 processors, he famously closed the commit message with “Good riddance.” Maintaining support for such old hardware had been complicating things behind the scenes for years while offering very little practical benefit, so removing all that legacy code was like taking a weight off the developers’ shoulders.

The rationale was the same a few years ago when distributions like Arch Linux decided to drop support for 32-bit hardware entirely. Maintainers had noticed the drop-off in downloads for the 32-bit versions of their distributions and decided it didn’t make sense to keep producing them. In an era where even budget smartphones ship with 64-bit processors, many Linux distributions have by now decided that 32-bit CPUs aren’t worth their time.

Given this trend, you’d think Ubuntu announcing last month that they’d no longer be providing 32-bit versions of packages in their repository would hardly be newsworthy. As it turns out, the threat of ending 32-bit packages caused the sort of uproar that we don’t traditionally see in the Linux community. But why?

An OS Without a Legacy

To be clear, there hasn’t been an official way to install Ubuntu on a 32-bit computer in some time now. Since version 18.04, released in April of 2018, Ubuntu has only provided 64-bit installers. Any alternative method of getting a newer version than that running on legacy hardware was unsupported and done at the user’s own risk.

Since ending support for installing on 32-bit hardware went fairly painlessly last year, Ubuntu concluded that this year it would make sense to pull the plug on providing packages for the outdated architecture as well. All modern software is either developed for 64-bit hardware specifically, or at the very least, can be compiled and run on a 64-bit machine. So as long as users made sure all of their packages were updated to the latest versions, there should be no problem.

This is a perfectly reasonable assumption because, on the whole, Linux has no concept of “legacy” software. Software developed for Linux is generally distributed as source code, which is then compiled by the distribution maintainers and provided to end users through a package management system. Less commonly, the end user might download the source themselves and compile it locally. In either event, regardless of how old the software itself is, the user ends up with a binary package tailored to their specific distribution and architecture.

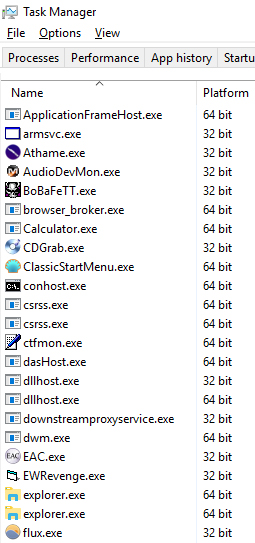

Put simply, the average Linux user in 2019 should rarely find themselves in a situation where they’re attempting to run a 32-bit binary on a 64-bit machine. This is in stark contrast with Windows users, who tend to get their software as pre-compiled binaries from the developer. If the developer hasn’t kept their software updated, then there’s a very real chance it will remain a 32-bit application forever.

For this reason, Windows will allow seamless integration of 32-bit software for many years to come. But for Linux, this capability is not nearly as important. Most distributions do provide the ability to run 32-bit binaries on a 64-bit installation through a concept known as multilib, but it’s not uncommon to find this capability disabled by default to reduce installation size. It is this multilib capability the community was worried they’d be losing when Ubuntu announced they would no longer be providing 32-bit packages. Support for 32-bit hardware had already come and gone, but support for 32-bit libraries was something different altogether.
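For readers curious whether a given program on their machine is one of these 32-bit holdouts, here is a minimal sketch in Python. It relies only on the ELF file format used by Linux executables: the file starts with the four magic bytes `0x7f 'E' 'L' 'F'`, and the fifth byte (`EI_CLASS`) is 1 for 32-bit and 2 for 64-bit objects. The function name `elf_class` is purely illustrative, and the sketch says nothing about whether the 32-bit libraries needed to actually run the binary are installed.

```python
import sys

ELF_MAGIC = b"\x7fELF"                      # first four bytes of every ELF file
EI_CLASS_NAMES = {1: "32-bit", 2: "64-bit"}  # values of the EI_CLASS byte


def elf_class(path):
    """Return '32-bit' or '64-bit' for an ELF file, or None otherwise.

    Hypothetical helper for illustration: it only inspects the first
    five bytes of the file and does not validate the rest of the header.
    """
    with open(path, "rb") as f:
        header = f.read(5)
    if len(header) < 5 or header[:4] != ELF_MAGIC:
        return None  # not an ELF file (script, Windows .exe, etc.)
    return EI_CLASS_NAMES.get(header[4])  # None for an unknown class byte


if __name__ == "__main__":
    # Inspect the Python interpreter itself as a convenient test subject.
    print(sys.executable, elf_class(sys.executable) or "not an ELF binary")
```

Pointing the function at a game binary or any other pre-compiled download is a quick way to see whether it would depend on multilib support on a 64-bit installation.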

Gamers Rise Up

So if there’s no extensive back catalog of 32-bit Linux software to worry about, then who really needs multilib support? For the user that’s running nothing but packages from their distribution’s official repository, it’s typically unnecessary. But Ubuntu, arguably the world’s most popular Linux operating system, is something of a special case.

Being so popular, Ubuntu is often the distribution of choice for the recent Windows convert, and has also become the de facto home of gaming on Linux thanks to official support from Valve’s Steam game distribution platform. This means the average Ubuntu user is far more likely to try to run 32-bit Windows programs through WINE, or Steam games which have never been updated for 64-bit, than somebody running a more niche distribution.

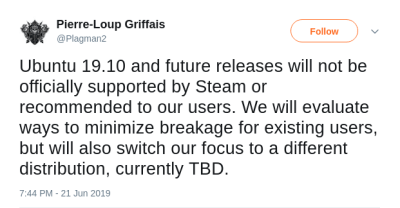

When WINE and Steam developers heard there was a possibility that Ubuntu would drop the 32-bit libraries their respective projects relied upon, both camps released statements saying that they would have to reevaluate their position on the distribution going forward. Ubuntu users were understandably concerned, and their very vocal displeasure at this potential scenario created a flurry of articles from all corners of the tech-focused media.

32-Bit Lives On, For Now

With the looming threat that WINE and Steam would no longer support their distribution, it only took a day or two for the Ubuntu developers to adjust their course. In a post to the official Ubuntu Blog, the developers made it clear that they never intended to completely disable the ability to run 32-bit “legacy” programs on future versions of Ubuntu. But more to the point, they promised to work closely with the teams behind WINE and Steam to make sure that any and all 32-bit libraries their software needs will remain part of the Ubuntu package repositories for the foreseeable future. For now, a crisis seems to have been averted.

But for how long? At what point will it be acceptable to finally remove the ability to natively run 32-bit software from modern operating systems? This story started something of an internal debate here at the Hackaday Bunker. In an informal poll, a few of us didn’t even know if our Linux systems had the packages installed to run 32-bit binaries, and only one of us could name the exact application they use that requires the capability. Still others argued that virtualization has already made it unnecessary to run such software natively, and that any code so far behind the curve should probably be run in a VM from a security standpoint to begin with.

When was the last time one of our penguin-powered readers had to invoke multilib to run something that wasn’t a closed-source game or Windows program through WINE? If you’re running Windows, do you give any thought to what architecture the various programs on your machine are actually compiled for? Let us know in the comments.